I. Introduction

In Part I, we showed that public availability does not mean public domain place—especially in copyright law. Technological leaps—such as the advent of generative AI—can change the nature of harm. Copyright law should keep pace.

That was the starting point for my narrative addressing the legal doctrine heavily relied upon by AI developers: fair use under 17 U.S.C. § 107. In Part 1, we touched on Factor 4 of the fair use analysis—the effect of the use upon the potential market for or value of the copyrighted work. In this Part 2, we turn to the remaining statutory factors: (1) the purpose and character of the use, (2) the nature of the copyrighted work, and (3) the amount and substantiality of the portion used. AI training is often compared to the “intermediate copying” line of cases, where courts have permitted the reproduction of entire works—not for expressive substitution, but to enable some further analysis, transformation, or functionality. The legal questions may look familiar. But the stakes are new. So are the facts.

II. Copy First, Ask Forgiveness Later

Before any discussion of fair use, we must start with the legal basics. Under U.S. copyright law, infringement requires only two elements:

- Ownership of a valid copyright; and

- Actionable copying of original elements of the work.

The first prong is usually undisputed.1 As noted in Part I, copyright protection attaches automatically to original works of authorship fixed in any tangible medium—whether they are registered, watermarked, or simply posted online. If it’s original and fixed, whether in print or digitally, it’s protected.

The second prong—copying—has generally been more contested. Courts look at whether the defendant had access to the work and whether it copied protected expression in a substantial way. But contrary to popular understanding, this prong is presumptively satisfied in the context of modern AI training.

Standard training practices by developers of large language models (LLMs) and other generative AI systems involve two practically unavoidable facts:

- The works are copied. As discussed in detail in Part I, AI models are not trained by passively “reading” material hosted online in real time. The training process requires downloading or scraping the content and reproducing it within the developer’s own infrastructure—local servers or cloud-based clusters—for storage, processing, and repeated access. This copying is neither incidental nor transitory —it is a technical requirement for training.2

- The copying is extensive and substantial. Rather than ingesting isolated snippets, AI systems typically consume entire corpora—including books, blog posts, journalistic content, images, and more. These works are retained, vectorized, and used to generate synthetic outputs that often mimic style, structure, and market function. Whether or not a final AI output reproduces a work verbatim or substantially similar to an original is not the test. The law asks whether protected expression was copied without authorization, and whether that reproduction was substantial. In the training context, it almost always is as a preliminary step.

Unlike in human learning, in the development of large AI models, these two elements of copyright infringement are not close questions—they are presumptively met.3 The only real legal issue is whether the copying can be justified as fair use under 17 U.S.C. § 107.4 That question lies at the heart of the first wave of litigation now unfolding around generative AI developers.

III. The Fair Use Defense

Virtually all substantive legal defenses raised by AI developers stem from principles of fair use.

“The fair use doctrine … requires a case-by-case analysis” and “is not to be simplified with bright-line rules.”

Campbell v. Acuff-Rose Music, Inc.5

In Part 1, we touched on Factor 4 of the fair use analysis: the effect of the use on the market for the original work. In today’s litigation landscape, courts are only beginning to grapple with how to measure that harm—especially when AI outputs are synthetic, dynamic, and not easily traced to any single source. But Factor 4, though consequential, is only one piece of the statutory text.

So what’s your rate for stopping the bots?

To determine whether generative AI training qualifies as fair use, the remaining statutory factors must be addressed on their own terms.

A. Factors 1 & 2: AI developers have transformed the defense—Not the use

At the core of Factor 1 of the fair use analysis is a deceptively simple word: transformative. AI developers routinely rely on this concept to justify the ingestion of copyrighted materials at scale, framing their training processes as “intermediate copying” that serves a new and different purpose. But that framing borrows the language of transformation while bypassing its actual meaning.

1. AI developers reframe training as “intermediate”

AI developers “transformative use” defense typically hinges on analogizing LLM development to a recognized class of fair use cases known as “intermediate copying”—instances where courts have permitted the reproduction of entire works, not for expressive reuse, but to enable a different function or utility.6

The cornerstone case is Authors Guild v. Google, where scanning books to enable search functionality was held to be transformative.7 Similarly, in Sony v. Universal, the Supreme Court upheld time-shifting as fair use, reasoning that it allowed for personal convenience without displacing the original market.8 Other cases, such as Perfect 10 v. Amazon9 and Fox News Network v. TVEyes,10 have likewise carved out protections for backend or technical uses that do not replicate the original’s expressive content.

AI developers argue that model training qualifies under this lineage. They describe the process as building a tool that “learns” from—but doesn’t replicate—underlying works. Some frame it as passive infrastructure, emphasizing that models do not store or display works in human-readable form. They claim outputs are “new” works, statistically generated—not copies.

But what matters is not how the works are represented internally, but how they are used. And in AI, the output often serves the same expressive function as the original.

[cont’d ↗]

2. Training isn’t transformative—It’s extractive and expressive

AI developers describe training as functional preprocessing with no expressive output. But that framing skirts the core fact: these systems are trained on expressive works to produce expressive works. Unlike in intermediate copying caselaw, the models are taught to replicate tone, structure, and style.

Courts have long held that fair use is less likely to apply when the original works are creative, rather than factual or functional in nature.11 That’s Factor 2. And in the AI context, there’s a second dimension: not just that the inputs are expressive—but the outputs are, too.

While Factor 2 typically examines the nature of the original work, courts weigh all four factors holistically. When both the input and output are creative, the rationale behind Factor 2 cuts even more sharply against fair use.12

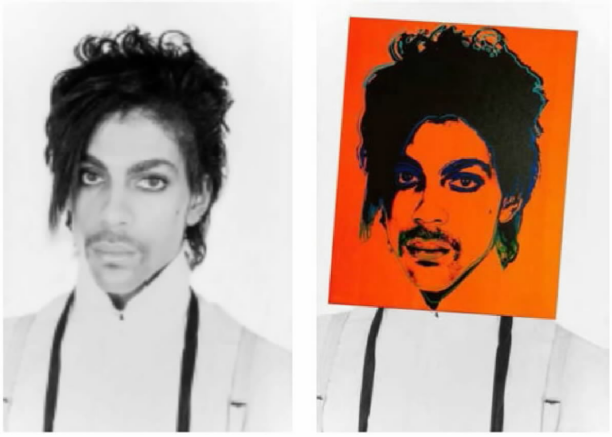

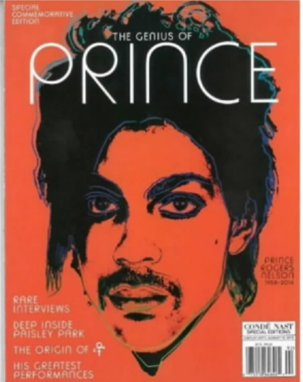

This principle was at the heart of the Supreme Court’s holding in Warhol v. Goldsmith, which found no transformative use where the output had the same commercial and expressive purpose as the original.13

Andy Warhol’s orange silkscreen portrait of Prince superimposed on the copyright plaintiff’s original portrait photograph then licensed for a magazine cover.

3. The output may look transformative—But that’s not the test

Even though generative AI outputs often look different from their training data, that alone does not make the use transformative. As the Supreme Court confirmed in Warhol v. Goldsmith, a change in appearance or format is insufficient when the underlying commercial and expressive purpose remains the same.14

AI models may convert expressive content into abstract vectors, but their purpose remains consistent: to produce new expressive works that fulfill the same market role as the originals.

Under black-letter law, what matters is not whether the reproduction is visible or literal15 —but whether it is fixed, unauthorized, and used in a way that exploits the original’s protected expression.16

As Campbell reaffirmed, novelty or technological sophistication does not make a use transformative unless it serves a new and different expressive purpose.17

Here, LLM training absorbs expressive content for the very purpose of generating new expressive content. That is not transformation—it is substitution.

B. The “amount and substantiality” of the portion used (Factor 3)

Under Factor 3, courts examine both the quantity and qualitative value of the portion copied in relation to the copyrighted work as a whole.18 Unsurprisingly, wholesale copying of entire works typically weighs against fair use—unless justified by a compelling transformative purpose.19 AI developers concede that their systems ingest complete works during training, but they argue that this copying is excused on the grounds that it is functionally necessary. But even if LLMs do not store works in human-readable form, the copying is fixed, indispensable, and geared toward expressive replication.

Necessity alone does not justify total copying. In Google, copying was necessary to enable search—a function distinct from expressive use. In contrast, generative AI’s “necessity” is aimed at reproducing expressive capacity itself—precisely what copyright exists to protect.

Once again, they turn to the intermediate copying cases to make their case.20 AI developers argue that the same logic applies here: the copying is total, yes—but it is necessary, functional, and not directly substitutive.

But that argument glosses over two critical differences. First, in Google and Sony, the complete copying was tolerated because the use was non-expressive. Search and time-shifting did not involve repurposing creative content for new creative expression. Generative AI systems, by contrast, copy expressive works for the express purpose of training a system to produce more expressive works. That is a fundamentally different use—one far closer to traditional derivative works than to intermediate processing.

Second, the scope of copying in AI training is not merely extensive—it is expressive at its core. The systems ingest not just factual summaries or snippets but the full narrative arc, stylistic choices, creative voice, and structural patterns of the originals. They learn to mimic the expressive elements that are at the heart of what copyright protects.21 Courts have repeatedly emphasized that copying even a small portion of a work may weigh against fair use when that portion captures the “heart” of the work.22 Here, the copying goes well beyond the heart—it takes the whole circulatory system.

In this context, the industry’s refrain that “everyone copies everything” becomes self-defeating: the ubiquity of the copying only underscores how foundational and deliberate it is. This is not incidental copying—it is the product’s foundation. Factor 3, therefore, does not merely disfavor fair use—it highlights how far removed this practice is from the narrow and functional copying permitted in intermediate use cases.

IV. Conclusion: Not Fair Use—At Least Under Current Doctrine

The fair use defense is intentionally flexible, but it is not without boundaries. And the claim that generative AI training qualifies as “transformative use” presses those boundaries in ways that courts have not yet squarely resolved. This Part II has focused on the first three statutory factors—purpose and character of the use (Factor 1), the nature of the copyrighted work (Factor 2), and the amount and substantiality of the portion used (Factor 3)—each of which presents significant challenges to the fair use defense in this context.

Factor 1 is undercut when the use is expressive in both intent and effect—aimed not at enabling functionality, but at generating new creative output based on the original works.

Factor 2 cuts against fair use when the underlying works are highly creative, and, by extension, when the outputs, likewise, occupy the same expressive domain.

Factor 3 presents the most difficult challenge, because the copying is not minimal, targeted, or incidental. It is comprehensive by design—fundamental to how generative models function.

Unlike prior fair use cases involving search engines, metadata indexing, or time-shifting for private use, the use here is not background or infrastructural. It is expressive replication at scale.

Courts may yet develop new frameworks to address the unique challenges posed by AI. But under the existing contours of fair use doctrine, the case for treating LLM training—and its reliance on expressive works without permission or licensing—as transformative raises concerns more akin to appropriation than fair use.

© 2025 Ko IP & AI Law PLLC

In Part 3, we’ll return to Factor 4, but with a new lens: Does fair use ask only about harm to the original work and individual licensing markets for it and/or should it be so limited? This question may decide the future of copyright in the age of AI. Now available, click here.

- Interestingly enough, this will actually not always be the case going forward—as AI is used to assist the creative process more and more, this will give rise to challenges as to the copyrightability of creative works that result. See U.S. Copyright Office, Copyright Registration Guidance: Works Containing Materials Generated by Artificial Intelligence, 88 Fed. Reg. 16190, 16192 (Mar. 16, 2023), available here; see also Jim W. Ko & Paul R. Michel, Testing the Limits of the IP Regimes: Unique Challenges of Artificial Intelligence, at 438–40 (“According to current USCO guidance, copyright protections are available only for the “human-authored aspects” of GenAI-assisted works of authorship.”), available here. ↩︎

- See also Ko IP & AI Law, Data Is the New Oil: How to License Datasets for AI Without Losing Control (Part 1 of 2) (explaining that while proprietary data licensors would ideally provide cloud-access only to LLM providers for various security reasons, the reality is that LLM providers generally require that data be delivered as a local copy to maintain the processing speed necessary to run their massive models), available here. ↩︎

- While rare, some technical exceptions to wholesale reproduction exist. For example, it is technically conceivable that a developer could build a model that accesses third-party content via real-time API calls or federated learning frameworks, which may avoid storing permanent local copies. However, such architectures are far less efficient for training, particularly at scale, and are not representative of how major commercial models are built. See Complaint ¶¶ 82–97, The New York Times Co. v. Microsoft Corp., No. 1:23-cv-11195 (S.D.N.Y. Dec. 27, 2023) (describing Microsoft and Open AI’s alleged unauthorized use and copying of the NYT’s content, starting with the collecting and storing of voluminous content to create training datasets); Complaint ¶¶ 35–48, Getty Images (US), Inc. v. Stability AI, Inc., No. 1:23-cv-00135 (D. Del. Feb. 3, 2023) (describing Stability AI’s alleged infringing of Getty’s image’s copyrights, starting with the unauthorized copying of billions of Getty’s images); Complaint ¶ 57, Andersen v. Stability AI Ltd., No. 3:23-cv-00201 (N.D. Cal. Jan. 13, 2023) (alleging Stability “scraped, and thereby copied over five billion images from websites as the Training Images used as training data for Stable Diffusion”).

There are a few notable cases of licensed training data—for instance, OpenAI’s reported licensing agreement with Shutterstock for use of its image archive. See Shutterstock Expands Partnership with OpenAI, Signs New Six-Year Agreement to Provide High-Quality Training Data, Shutterstock (July 11, 2023), available here. There are reportedly a few open-source projects limit training to Creative Commons or public domain content. But these remain rare outliers. The dominant paradigm in industry LLM development continues to involve massive-scale scraping and internal copying of unlicensed, copyright-protected material, often without attribution or consent.

Under black-letter law, “[c]opyright protection includes the exclusive right . . . to reproduce the copyrighted work in copies.” 17 U.S.C. § 106(1). Where such reproduction occurs without permission, particularly in bulk, it satisfies the infringement threshold—even if no specific output is later found to be substantially similar. ↩︎ - 17 U.S.C. § 107 (Limitations on exclusive rights: Fair use) states in its entirety:

Notwithstanding the provisions of sections 106 and 106A, the fair use of a copyrighted work, including such use by reproduction in copies or phonorecords or by any other means specified by that section, for purposes such as criticism, comment, news reporting, teaching (including multiple copies for classroom use), scholarship, or research, is not an infringement of copyright. In determining whether the use made of a work in any particular case is a fair use the factors to be considered shall include—

(1) the purpose and character of the use, including whether such use is of a commercial nature or is for nonprofit educational purposes;

(2) the nature of the copyrighted work;

(3) the amount and substantiality of the portion used in relation to the copyrighted work as a whole; and

(4) the effect of the use upon the potential market for or value of the copyrighted work.

The fact that a work is unpublished shall not itself bar a finding of fair use if such finding is made upon consideration of all the above factors. ↩︎ - 510 U.S. 569, 577 (1994). ↩︎

- See Authors Guild v. Google, Inc., 804 F.3d 202, 207–08 (2d Cir. 2015); Sony Corp. of Am. v. Universal City Studios, Inc., 464 U.S. 417 (1984). ↩︎

- Authors Guild, 804 F.3d at 207–09 (finding that digitizing entire books to create a searchable index constituted a transformative use that “adds something new” with “a further purpose”). ↩︎

- Sony, 464 U.S. at 454–55 (holding that noncommercial, private time-shifting for later viewing was fair use because it did not materially impair the market for copyrighted works). ↩︎

- Perfect 10, Inc. v. Amazon.com, Inc., 508 F.3d 1146, 1165–68 (9th Cir. 2007) (finding Google’s use of thumbnail images in image search to be a transformative use). ↩︎

- Fox News Network, LLC v. TVEyes, Inc., 883 F.3d 169, 176–77 (2d Cir. 2018) (finding that time-stamped video clips made searchable for monitoring purposes could qualify as transformative, though limits applied). ↩︎

- Harper & Row Publishers, Inc. v. Nation Enters., 471 U.S. 539, 563 (1985) (“The law generally recognizes a greater need to disseminate factual works than works of fiction or fantasy.”). ↩︎

- See id. ↩︎

- Andy Warhol Found. for the Visual Arts, Inc. v. Goldsmith, 598 U.S. 508, 523–25 (2023) (reaffirming that expressive purpose of both the original and the secondary work is relevant under Factor 1). ↩︎

- Id. ↩︎

- See 17 U.S.C. § 106(1). ↩︎

- See TVEyes, Inc., 883 F.3d at 181 (holding that the intentional recording, copying, and retaining of audiovisual content to enable another function is by itself an infringement). ↩︎

- Campbell, 510 U.S. at 579. ↩︎

- See 17 U.S.C. § 107(3) (“[In determining whether the use made of a work in any particular case is a fair use the factors to be considered shall include] the amount and substantiality of the portion used in relation to the copyrighted work as a whole”); Campbell, 510 U.S. at 586. ↩︎

- See Harper & Row, 471 U.S. at 564–66 (copying 300 words from an unpublished manuscript was not fair use because it captured the “heart” of the work). ↩︎

- See supra Sec. III.A.1. ↩︎

- See Campbell, 510 U.S. at 587–88 (noting that even extensive copying may be permissible for transformative purposes, but cautioning that substantial use weighs against fair use when not justified). ↩︎

- Harper & Row, 471 U.S. at 565–66. ↩︎

Leave a Reply